PSET4 - Arpeggiator

README

In lecture, we introduced a GM (General MIDI) synthesizer called FluidSynth, which gives us a much larger palette of instrument sounds than sine waves. We also laid the foundation for generating rhythms by creating Clock, SimpleTempoMap, and Scheduler classes, and we tested them with Metronome and Sequencer classes. In this assignment, we were asked to create an arpeggiator. For the first part, I built an arpeggiator along with some graphical elements that make it intuitive to use. For the second part, we were asked to make an interactive song. I interpreted that prompt in my own way, which set my implementation apart from the rest of the class.
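At its core, an arpeggiator turns a held chord into a repeating sequence of single notes. The following is a minimal sketch of that idea, not the assignment's actual code. The function names, the direction strings, and the tick values (480 ticks per quarter note) are hypothetical. A real version would post each note to the Scheduler and play it through FluidSynth instead of returning a list.

```python
from itertools import cycle, islice


def arpeggio_pattern(notes, direction="up"):
    """Return one cycle of the arpeggio as a list of MIDI pitches."""
    ordered = sorted(notes)
    if direction == "up":
        return ordered
    if direction == "down":
        return ordered[::-1]
    if direction == "updown":
        # Climb, then descend without repeating the top and bottom notes.
        return ordered + ordered[-2:0:-1]
    raise ValueError(f"unknown direction: {direction!r}")


def arpeggiate(notes, direction, note_len, num_notes, start_tick=0):
    """Return (tick, pitch) pairs: num_notes pitches spaced note_len ticks apart."""
    pattern = arpeggio_pattern(notes, direction)
    pitches = islice(cycle(pattern), num_notes)
    return [(start_tick + i * note_len, pitch) for i, pitch in enumerate(pitches)]


if __name__ == "__main__":
    # C major triad as eighth notes, assuming 480 ticks per quarter note.
    for tick, pitch in arpeggiate([60, 64, 67], "updown", 240, 6):
        print(tick, pitch)
```

Changing `note_len` changes the rhythm without touching the pitch pattern. This separation lets something like the SongBird bird's speed drive the rhythm independently of the chord.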

SongBird

Since I had already done a simple GUI and mapping for part one of the PSET, I wanted to try something more complex and creative for this part. It was inspired by a project my professor had consulted on for Walt Disney Imagineering: in the Imagination Pavilion at Epcot, there is a musical interactive where guests control Figment to play music. I reinterpreted that as a collaborative musical conversation with a bird. The song playing is "Zip-A-Dee-Doo-Dah", and the interactive component is the blue bird flying around the scene. The bird flies toward the user's mouse, and its speed is a function of how far it is from the mouse. That speed in turn changes the arpeggio rhythm and the size of the music notes. Additionally, the octave of the bird's pitches depends on which portion of the screen it is flying in, and the bird's channel pans left and right as it moves. The arpeggiated chords in the bird's song change to match the current chord in the song. I later reimplemented this assignment to run on the 8K screen in our lab and added a second song, "Rockin' Robin", for which the bird is red! :)
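The flight and music mappings described above can be sketched in plain Python. This is a hypothetical reconstruction, not the project's code. The speed formula, every constant, the chord track, and the choice to split the screen into horizontal bands by height are assumptions; the README does not say which screen regions change the octave. Each frame, the results would update the bird's position, set the note length handed to the arpeggiator, and supply the pitches it arpeggiates.

```python
import bisect
import math

# Hypothetical tuning values; the README does not give the real ones.
SPEED_GAIN = 2.0          # pixels/second of speed per pixel of distance
MAX_SPEED = 600.0         # cap on flight speed, in pixels/second
# Note lengths in ticks (assuming 480 ticks per quarter): quarter, eighth,
# eighth-note triplet, sixteenth. Faster flight selects shorter notes.
NOTE_LENGTHS = [480, 240, 160, 120]
# Hypothetical chord track for the song: (start tick, chord as MIDI pitches).
CHORDS = [(0, [60, 64, 67]), (1920, [65, 69, 72]), (3840, [67, 71, 74])]


def bird_speed(bird, mouse):
    """Speed grows with the bird's distance from the mouse, up to MAX_SPEED."""
    return min(math.dist(bird, mouse) * SPEED_GAIN, MAX_SPEED)


def step_toward(bird, mouse, dt):
    """Move the bird toward the mouse for dt seconds without overshooting."""
    dx, dy = mouse[0] - bird[0], mouse[1] - bird[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return bird
    move = min(bird_speed(bird, mouse) * dt, dist)
    return (bird[0] + dx / dist * move, bird[1] + dy / dist * move)


def note_length(speed):
    """Map speed onto NOTE_LENGTHS: slow -> quarter notes, fast -> sixteenths."""
    idx = int(speed / MAX_SPEED * len(NOTE_LENGTHS))
    return NOTE_LENGTHS[min(idx, len(NOTE_LENGTHS) - 1)]


def octave_shift(y, height, bands=3):
    """Split the screen into horizontal bands; each band up is one octave higher."""
    band = max(0, min(int(y / height * bands), bands - 1))
    return 12 * (band - bands // 2)


def current_chord(tick):
    """Return the chord sounding at the given tick."""
    starts = [start for start, _ in CHORDS]
    i = bisect.bisect_right(starts, tick) - 1
    return CHORDS[max(i, 0)][1]


def bird_notes(tick, y, height):
    """Chord tones for the bird's arpeggio, transposed by screen region."""
    shift = octave_shift(y, height)
    return [pitch + shift for pitch in current_chord(tick)]
```

Binning the speed into a few fixed note lengths, rather than varying the length continuously, keeps the bird's part locked to the song's beat grid.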

NoteBlocks

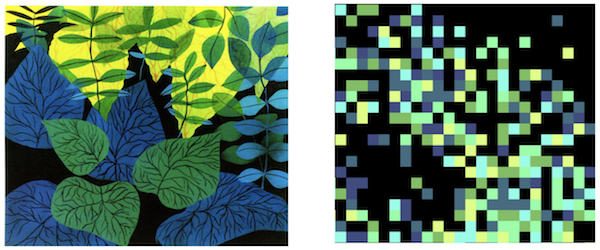

I wanted to use our newly developed synthesizer skills to upgrade my BubbleWrap game from last week. To improve both the look and the sound, I replaced the bubbles with multicolored squares that fill the scene. I chose the color scheme deliberately, aiming to match the style of Mary Blair. However, because I wanted each level to be randomly generated, I could not hand-pick every color, so I defined hue and brightness variables that steer the random colors toward her style.
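One way to randomize colors while keeping them inside a chosen style is to randomize in HSV space within fixed ranges. Below is a minimal sketch using Python's standard `colorsys` module. The anchor hues, jitter, and saturation and brightness ranges are hypothetical stand-ins for a bright, saturated palette, not the values the game actually uses. Each block also keeps its palette index, so the sound code can tie a tone to the color.

```python
import colorsys
import random

# Hypothetical palette parameters meant to evoke a bright, saturated
# mid-century look; the README does not list the actual values.
# Hues are on a 0-1 scale: roughly red, orange, golden yellow, green,
# cyan, sky blue, magenta.
BASE_HUES = [0.0, 0.08, 0.14, 0.33, 0.5, 0.58, 0.83]
HUE_JITTER = 0.03          # random wobble around each base hue
SATURATION_RANGE = (0.6, 0.9)
BRIGHTNESS_RANGE = (0.7, 1.0)


def random_block_color(rng):
    """Return (color_index, (r, g, b)) with each channel in 0-1."""
    index = rng.randrange(len(BASE_HUES))
    hue = (BASE_HUES[index] + rng.uniform(-HUE_JITTER, HUE_JITTER)) % 1.0
    saturation = rng.uniform(*SATURATION_RANGE)
    brightness = rng.uniform(*BRIGHTNESS_RANGE)
    return index, colorsys.hsv_to_rgb(hue, saturation, brightness)


def random_level(cols, rows, seed=None):
    """Generate a rows x cols grid of block colors; a seed makes a level repeatable."""
    rng = random.Random(seed)
    return [[random_block_color(rng) for _ in range(cols)] for _ in range(rows)]
```

Constraining brightness from below is what keeps every level cheerful: no randomly chosen block can come out muddy or dark, no matter the seed.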

On the audio side, the synth channels pan between the left and right speakers as the cursor moves around the scene. Each block color is also mapped to a tone, which keeps the sound consistent with the visuals. Higher pitches are on the right side of the screen. Lastly, if more than 3 commands are scheduled at once (i.e., the user is moving the cursor quickly), all pitches are raised by an octave.
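These audio rules fit into two small functions. In this hypothetical version, each palette color index owns a fixed pitch, and horizontal position adds whole octaves so that the right side sounds higher. A pending-command count above 3 adds one more octave. The pitch table, the three screen bands, and the `pending_count` parameter (standing in for however the game counts queued scheduler commands) are assumptions, not the game's actual code. The pan value follows the MIDI convention for Control Change 10 (pan), where 0 is hard left, 64 is center, and 127 is hard right.

```python
# Hypothetical pitch for each palette color index (MIDI numbers, C major pentatonic).
COLOR_PITCHES = [48, 50, 52, 55, 57]
OCTAVE_BANDS = 3   # hypothetical: left, middle and right thirds of the screen
MAX_PENDING = 3    # from the README: more than 3 scheduled commands -> up an octave


def block_pitch(color_index, x, width, pending_count):
    """Pitch for a block: color picks the pitch class, x picks the register."""
    base = COLOR_PITCHES[color_index % len(COLOR_PITCHES)]
    # Whole-octave steps keep each color's pitch class the same everywhere.
    band = max(0, min(int(x / width * OCTAVE_BANDS), OCTAVE_BANDS - 1))
    pitch = base + 12 * band
    if pending_count > MAX_PENDING:
        # A busy scheduler means the cursor is moving quickly.
        pitch += 12
    return pitch


def pan_value(x, width):
    """MIDI pan controller value: 0 = hard left, 64 = center, 127 = hard right."""
    return max(0, min(127, round(127 * x / width)))
```

Shifting by whole octaves, rather than by arbitrary intervals, is what lets "higher on the right" coexist with "one tone per color": a red block always plays the same note name, just in a different register.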