Reality, Virtually Hackathon

INSPIRATION

When assessing the affordances of augmented reality, we wanted to take advantage of how sound changes temporally in a physical environment. Our team wanted to explore character development, musical composition, and phone position to create a unique experience for many types of users. We wanted our app to appeal to children and adults, novices and experts. Our team members come from diverse backgrounds and brought different skill sets to the hackathon, which helped us create something better than any of us could have created alone. Our team members included:

Emily Salvador (Team Lead, Synthesizer Lead, 2D Asset Designer): Emily is currently a first-year master's student at the MIT Media Lab, in the Object Based Media group. She majored in computer science and music in undergrad. She wanted to create something musical that would make it simple and quick to build interesting compositions and spatial soundscapes. During her time working at Walt Disney Imagineering and Universal Creative, she wanted to explore how personal devices can take advantage of information embedded in their physical environment. In her free time, she loves trying all the new filters on Snapchat.

Yichao Guan (Sound Designer): Yichao is a graduate of Berklee College of Music, with a double major in Electronic Production & Design and Film Scoring. He aspires to be a composer and sound designer for games and films, but is now just a starving, struggling freelancer. Sometimes at night, he dreams of cheap Chinese takeout.

Vik Parthiban (Hardware Engineer, learning more about the software): Vik is a first-year master's student at the MIT Media Lab, also in the Object Based Media group. He did his bachelor's and master's in Electrical Engineering and worked at Magic Leap building light-field displays for mixed reality. He's currently researching new holographic media for glasses-free 3D displays and hopes to bring a holo-display to everyone's home in the near future. On the side, he is an advisor to a student research group working on the Hyperloop Transportation System with SpaceX and Elon Musk.

Colin Greenhill (Software Developer, 3D Modeler and Animator): Colin is a software developer who specializes in educational games, tools, and simulations. His experience teaching animation and game development at The Center for Digital Arts and the Boston Museum of Science, and his work at the Education Arcade at MIT, have given him the opportunity to explore and further develop the powerful overlap of games and education.

Louis DeScioli (Software Engineer & Designer): Louis is a software engineer who has made everything from Internet-connected aquaponic gardens to educational apps for autistic families. He studied Electrical Engineering and Computer Science at MIT. He enjoys creating software experiences that delight and inspire. He is an aspiring augmented reality developer, making a mobile augmented reality game, Out Here Archery. In his spare time he enjoys playing his cello and cooking.

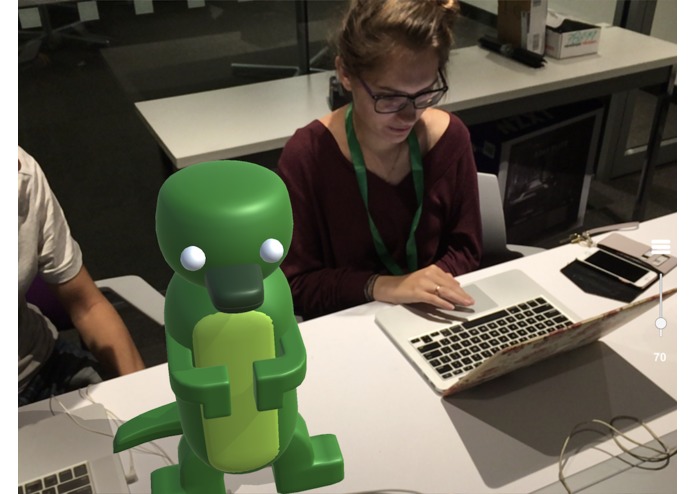

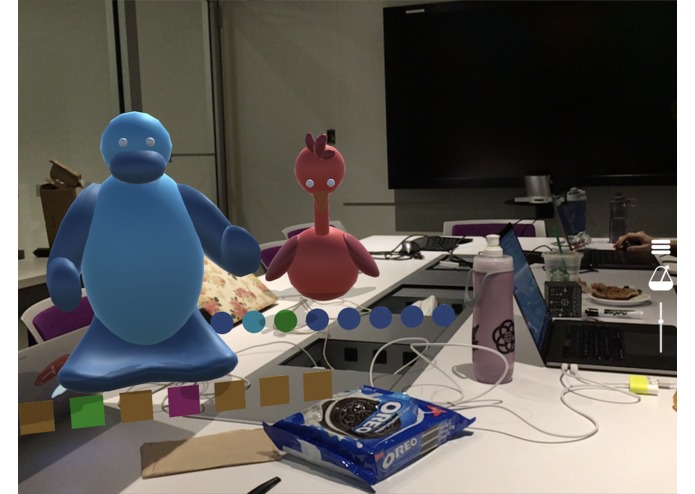

WHAT IT DOES

We've created an iPad/iPhone app that lets you place 3D characters into your physical environment. You interact with those characters through a sequencer that tells each character when to play its instrument. At the character level, you can toggle between different chords and notations to quickly create beautiful compositions. As you add more characters, your composition gains more layers, which increases the complexity and musicality of the piece. Because the characters are locked to a physical position, the balance of the instrumentation adjusts as you move around the room, depending on how close you are to each character. At the global level, you can change the tempo of your instruments (which also determines how fast the characters animate) and enable the randomizer, which automatically creates melodies and rhythmic variation for you.
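To illustrate how the balance of the instrumentation can depend on where you stand, here is a minimal sketch in Python. It is not the app's code: the inverse-distance rolloff curve, the function names `distance_gain` and `mix_levels`, and the `min_distance` and `rolloff` parameters are all assumptions made for illustration, and the actual attenuation used in the app is not described here.

```python
import math


def distance_gain(listener, source, min_distance=1.0, rolloff=1.0):
    """Return a gain in (0, 1] for a sound source heard from `listener`.

    Assumed model (hypothetical): full volume within `min_distance`,
    then an inverse-distance falloff controlled by `rolloff`.
    """
    d = math.dist(listener, source)  # Euclidean distance between two 3D points
    if d <= min_distance:
        return 1.0
    return min_distance / (min_distance + rolloff * (d - min_distance))


def mix_levels(listener, characters):
    """Map each character name to its gain at the listener's position."""
    return {name: distance_gain(listener, pos) for name, pos in characters.items()}


# Two characters locked at fixed positions in the room (meters, hypothetical).
characters = {"drummer": (0.0, 0.0, 0.0), "bassist": (4.0, 0.0, 0.0)}

# Standing next to the drummer: the drummer dominates the mix.
levels = mix_levels((0.0, 0.0, 0.0), characters)
```

Calling `mix_levels` again with a new listener position each frame would shift the balance toward whichever character is closest.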

HOW WE BUILT IT

We built the app in three parts: character animation, synthesizer and sound design, and augmented reality tracking.

Character Animation: The characters were modeled, rigged, and animated in Blender, then imported into Unity3D. Each character has a distinct animation to go along with the soundscapes that the user can create.

Synthesizer and Sound Design: For the synthesizer, we had three main scripts. The BeatHandler was a global script that had a delegate called BeatAction. This script maintained the tempo of the game and made the calls that depend on that tempo. The Synthesizer script kept track of several variables for each individual track. For example, it set how many cubes were in each synthesizer and how many individual sound files could be called. The CubeController script kept track of the state of each cube: what color the cube should be, whether or not that cube should play a note, and which note it should play.
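The relationship between the three scripts can be sketched roughly as follows. This is a Python illustration of the structure, not the Unity scripts themselves: the class names mirror the scripts described above, but every method, field, and file name (`tick`, `on_beat`, `toggle`, `played`, `c.wav`, and so on) is hypothetical, and the list of callbacks only stands in for the BeatAction delegate.

```python
class BeatHandler:
    """Global clock: holds the tempo and notifies subscribers on each beat."""

    def __init__(self, bpm=120):
        self.bpm = bpm
        self.beat_action = []  # stand-in for the BeatAction delegate
        self.beat = 0

    @property
    def seconds_per_beat(self):
        return 60.0 / self.bpm

    def tick(self):
        """Advance one beat and call every subscribed callback."""
        for callback in self.beat_action:
            callback(self.beat)
        self.beat += 1


class CubeController:
    """State of one sequencer cube: on/off, its color, and its note."""

    def __init__(self):
        self.active = False
        self.note = None  # index into the track's sound files

    def toggle(self, note):
        self.active = not self.active
        self.note = note if self.active else None

    @property
    def color(self):
        return "lit" if self.active else "dim"


class Synthesizer:
    """One track: a row of cubes plus the sound files it may trigger."""

    def __init__(self, num_cubes, sound_files):
        self.sound_files = sound_files
        self.cubes = [CubeController() for _ in range(num_cubes)]
        self.played = []  # record of (beat, file) instead of real audio

    def on_beat(self, beat):
        cube = self.cubes[beat % len(self.cubes)]  # loop over the row
        if cube.active:
            self.played.append((beat, self.sound_files[cube.note]))


clock = BeatHandler(bpm=120)
bass = Synthesizer(num_cubes=4, sound_files=["c.wav", "e.wav", "g.wav"])
clock.beat_action.append(bass.on_beat)  # subscribe the track to the clock
bass.cubes[0].toggle(0)
bass.cubes[2].toggle(2)
for _ in range(8):
    clock.tick()
```

Keeping the tempo in one place means a single tempo change reaches every track, which fits the global tempo control described earlier.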

AR Tracking: For the augmented reality tracking, we used Apple's ARKit framework with the Unity ARKit plugin. The main challenge was tracking the discovered planes in the 3D scene and showing a square that tells the user whether or not a model can be placed at that spot in the scene. Other considerations were creating shadows for the virtual objects and figuring out how to scale assets so they appear at reasonable sizes.
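The placement check behind such an indicator can be illustrated with a simplified sketch. It assumes each discovered plane is reduced to a horizontal rectangle with a center and an extent, and that the candidate point is already expressed in the same coordinates; `DetectedPlane` and `can_place` are hypothetical names, and this is not the ARKit or Unity plugin API.

```python
from dataclasses import dataclass


@dataclass
class DetectedPlane:
    """A horizontal plane reduced to a rectangle (meters, hypothetical)."""
    center_x: float
    center_z: float
    extent_x: float  # full width along x
    extent_z: float  # full depth along z

    def contains(self, x, z):
        """True if the point (x, z) lies inside the rectangle."""
        return (abs(x - self.center_x) <= self.extent_x / 2
                and abs(z - self.center_z) <= self.extent_z / 2)


def can_place(planes, x, z):
    """True if any discovered plane covers the candidate point."""
    return any(plane.contains(x, z) for plane in planes)


# One discovered plane, 2 m wide and 1 m deep, centered at the origin.
planes = [DetectedPlane(center_x=0.0, center_z=0.0, extent_x=2.0, extent_z=1.0)]
on_plane = can_place(planes, 0.9, 0.4)   # inside the rectangle
off_plane = can_place(planes, 1.1, 0.0)  # past the edge along x
```

The indicator square could then switch its appearance based on the boolean result.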